5. I built Voice Integration into the Codex CLI — IPC 101

2025-09-06

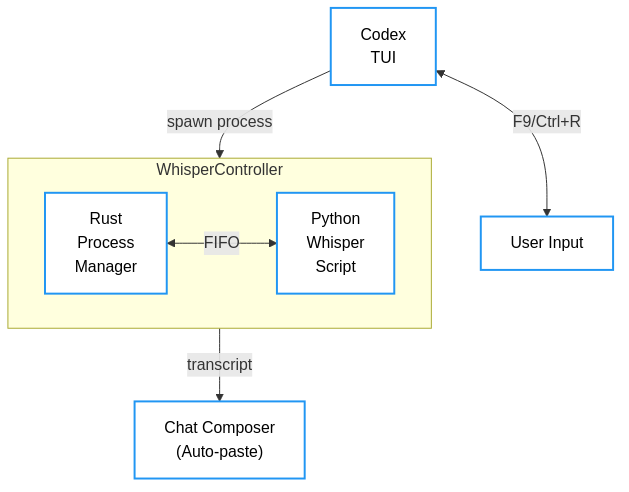

Overview

Over the summer, I became a much better typist. In short bursts, I can now reach 100 — 120 words per minute. But this is still proving not to be good enough for all the things I want to do. I want to interact with LLMs and agentic tools faster. I realized I could speak to them. So, I integrated speech-to-text into my fork of the Codex CLI.

I had been an avid user of Claude Code for a month by that point, but it is not open source. I could reverse-engineer it, but I did not want to spend an undue amount of time digging through internals. I had just begun using the Codex CLI, and because it is open source, I realized I could wire in my own speech-to-text pipeline. I had used Whisper before, and I knew that if I could take the output that Whisper emits to STDOUT, I could pipe it into the Codex CLI. This is Linux/Unix 101. Whisper Base. It misplaces punctuation and sometimes misses context, but it works well enough for most things. I knew that if I could get the Codex TUI to respond to Ctrl + R, which, at that point, it already did, and trigger a process invocation of the Whisper-base (Python) wrapper, I could source the output from the process back into the TUI chat window.

Sequence diagram

STDOUT/STDERR

I tried the straightforward approach of reading from STDOUT for transcripts and watched STDERR for warnings. The first version read STDOUT and then read STDERR. This pattern bricked the Codex CLI because it deadlocked. I did not truly understand what happened, but the child process wrote to both streams. One buffer filled while the other waited for output. I switched Codex to concurrent reading and removed the deadlock, but the transcripts still did not arrive reliably.

Noisy STDERR

STDERR flooded with ALSA, JACK, and CUDA warnings. The transcript sat somewhere in that noise, and filtering for markers felt brittle and unreliable. I stopped treating STDERR as a source for structured data.

(nodeenv2251) (3124) ➜ codex git:(integration-2025-01-09-phase2) ✗ python codex-rs/scripts/voice/whisper_cli.py

🤖 Loading Whisper model...

/home/rahul/3124/lib/python3.12/site-packages/torch/cuda/__init__.py:128: UserWarning: CUDA initialization: CUDA unknown error - this may be due to an incorrectly set up environment, e.g. changing env variable CUDA_VISIBLE_DEVICES after program start. Setting the available devices to be zero. (Triggered internally at ../c10/cuda/CUDAFunctions.cpp:108.)

return torch._C._cuda_getDeviceCount() > 0

ALSA lib confmisc.c:855:(parse_card) cannot find card 'default'

ALSA lib conf.c:5180:(_snd_config_evaluate) function snd_func_card_inum returned error: No such device

ALSA lib confmisc.c:422:(snd_func_concat) error evaluating strings

ALSA lib conf.c:5180:(_snd_config_evaluate) function snd_func_concat returned error: No such device

...

🎙️ Whisper Real-time CLI

==============================

Commands:

ENTER - Start/Stop recording

'quit' - Exit

==============================

Press ENTER to start recording (or 'quit' to exit):Named pipes

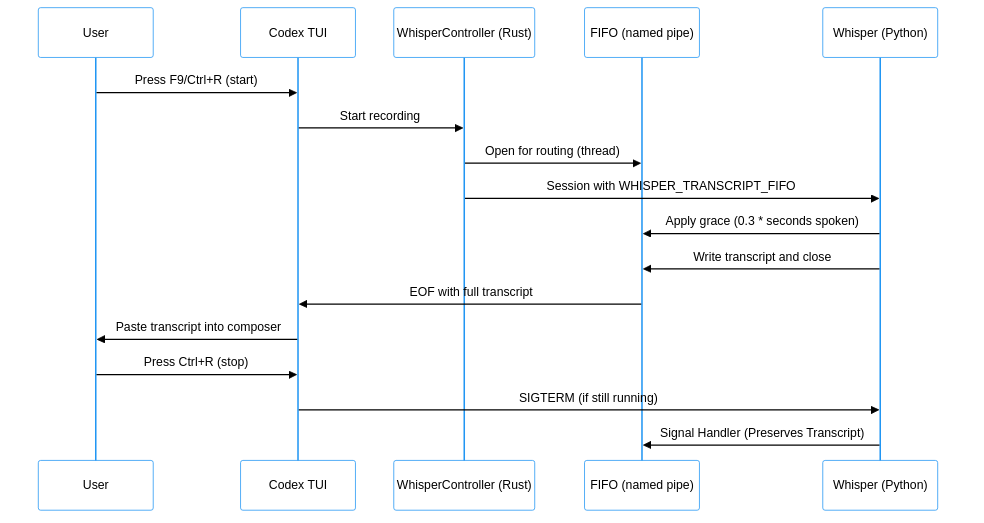

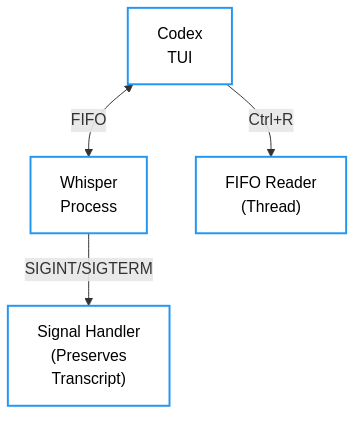

Since I couldn’t get direct reads of the STDERR and STDOUT interfaces to work as expected, I revised my thinking around pipes. Specifically, I pivoted to using a dedicated named pipe. I had never used a named pipe before and had only learned about it through my perusal of The Linux Programming Interface a few years ago. I read from the pipe until EOF and received the full transcript. I configured Whisper to write to a path from a WHISPER_TRANSCRIPT_FIFO environment variable, and I had the Rust side read that pipe.

But FIFOs block on open. The writer (Whisper) waits for a reader (Codex CLI), and the reader waits for a writer. This is actually expected behaviour since both the reader and writer execute the open() system call, which is blocking. If I spawn the Whisper process and open the FIFO for reading only after it tries to write, both sides can wait forever. This deadlocked again. I fixed this by opening the FIFO for reading before Whisper opens it for writing. I launched a reader thread first and then started Whisper. That sequence broke the circular dependency and unblocked the writer. In retrospect, I could also have used O_NONBLOCK, but I was not aware of this or had forgotten it.

This is the point at which I was able to get the Codex CLI to successfully allow speech-to-text conversion.

Failures to process longer inputs

It all worked fine until I had to process longer inputs. What would happen on longer inputs was that the stream would prematurely get cut off. If you’ve tried speaking longer paragraphs into ChatGPT’s mobile app, you’ve probably experienced this bug. As the input grows, Whisper has more work to do. If the pipe stream closes before Whisper finishes producing output, no transcript is generated. I added a wait time equal to 0.3 times the number of seconds spoken between closing the stream and when I send SIGTERM to the writer (Whisper).

I also added a signal handler on the Whisper side that finalized the transcript and wrote it to the FIFO before exiting, even on interruption. That change ensured that pressing Ctrl+R to stop did not discard the last sentence.

We found that long utterances sometimes lost their tail because I sent SIGTERM too soon after closing the audio stream. To prevent that, I widened the gap between stream close and SIGTERM. At one point, I made the gap excessively long, which removed truncation but felt slow. I then tuned it to scale with speaking time: the grace period equals 0.3 times the number of seconds spoken. This adaptive delay keeps long transcripts intact without making short recordings feel sluggish. I am evaluating whether to reduce this factor further — potentially lowering the multiplier while keeping long inputs reliable.

The Final Architecture

4. Random dictionary lookups are slower than random list lookups

2024-09-05

I recently came across an interesting LeetCode problem to compute the dot product of two sparse vectors. A sparse vector is one that is largely composed of 0’s. Such a vector could be 100s of millions of elements large, so it would be prudent, if possible, to eliminate indexes whose elements are 0. This could save significant time if in fact the vector is largely 0s. For the sake of the problem statement, you can trust this to be the case. You cannot, however, trust that the second vector is mostly 0s. Again, for the sake of the problem statement, this is sensible, because it compels an additional constraint for the problem-solver to think about — the data structure to use to represent the two vectors. You have two top choices — a hash map and an array. From the perspective of an interview, a hashmap would be completely idiomatic and reasonable. After all, hashmaps offer O(1) lookup time, right? Well, not quite. And this brings me to an interesting observation I made while solving this problem.

The Experiment

I decided to benchmark both approaches. My test involved creating sparse vectors with varying degrees of sparsity and measuring the time it took to perform random lookups using both hashmaps and arrays. The results confirmed what I had anticipated — the computational overhead of hashing would make hashmaps slower than arrays for this use case.

Here are the actual numbers from my benchmarks:

Python Results

Testing array of tuples with random lookups...

Array sum: 499982475280328

Array time: 2.83 seconds

Testing dict with random lookups...

Dict sum: 499982475280328

Dict time: 4.76 secondsC++ Results

Testing vector of pairs with random lookups...

Array sum: 499888961017249

Array time: 0.103 seconds

Testing unordered_map with random lookups...

Dict sum: 499888961017249

Dict time: 2.495 secondsThe performance difference is striking. In Python, dictionaries took about 1.7x longer than arrays. But in C++, the gap is even more dramatic — unordered_maps took roughly 24x longer than vectors! This made perfect sense when I considered the underlying mechanics of each data structure.

You can find the complete benchmark code here.

Why Arrays Are Faster

The performance difference stems from several factors I had expected. Modern CPUs are incredibly good at sequential memory access. When you iterate through an array, the CPU’s prefetcher can predict which memory locations you’ll need next and load them into cache before you even ask for them. This is especially true when you’re accessing elements in order, which is exactly what happens during a dot product calculation if you store your sparse vector as a sorted list of (index, value) pairs.

Hashmaps, by contrast, introduce multiple sources of overhead. First, there’s the hash computation itself — not free, despite what Big-O notation might suggest. Then there’s the scattered memory access pattern. Each lookup involves computing a hash, potentially dealing with collisions, and jumping to seemingly random memory locations. These random accesses can’t be prefetched effectively, leading to more cache misses. Each cache miss can cost hundreds of CPU cycles while the processor waits for data from main memory.

The Trade-offs

This doesn’t mean arrays are always better. If your vectors are extremely sparse (say, less than 0.1% non-zero elements), or if you need to perform many random lookups rather than sequential iterations, hashmaps might still win. The key insight is that Big-O notation doesn’t tell the whole story. A hashmap’s O(1) lookup has a much larger constant factor than an array’s O(1) indexed access — something I had suspected would make a material difference in practice.

For the LeetCode problem in question, I found that using a sorted array of (index, value) pairs for the sparser vector and iterating through it while looking up values in the second vector yielded the best performance. This approach minimizes the number of operations while taking advantage of the CPU’s cache-friendly sequential access patterns.

Lessons Learned

This experience reinforced an important principle: theory and practice often diverge in predictable ways if you understand the underlying hardware. What looks equivalent in Big-O notation can have vastly different performance characteristics in the real world. The interplay between algorithms and hardware is complex, and anticipating these interactions is crucial for writing truly efficient code. Sometimes, the “simpler” data structure is actually better, not despite its simplicity, but because of it.

Leave a comment

3. Demystifying MCPs: From need for an abstraction to a standard

2025-07-13

TL;DR: Model Context Protocol (MCP) solves a real need — enabling LLMs to interact with external systems—but the gap between the simple concept and the complex specification (JSON-RPC 2.0, bidirectional communication, capability negotiation) leads to partial implementations that often amount to glorified SDK wrappers rather than truly interoperable systems. While standardization offers benefits for universal tool sharing, the specification’s evolving nature and overhead mean that simpler, context-aware approaches may better serve specific use cases; ultimately, specifications like MCP represent necessary tradeoffs for collective progress in an emerging ecosystem.

MCPs have been around since November 2024, and I think most people almost get it. It’s conceptually simple to grasp since it allows you to invoke tools and operate against resources that would otherwise be inaccessible to a LLM.

There are opinions about MCPs ranging from it being an SDK wrapper to a cure-all. MCP users as a whole are generally oblivious to what makes an implementation MCP or why they probably need one. Generalizations such as calling it a USB-C for LLMs don’t help. The risks of using MCPs are understated.

LLMs are closed systems - The need for an MCP-like abstraction

At the very core of it, LLMs are just neural networks that do not have significant capability to interact with the outside world. They cannot originate HTTP requests to the Internet in real-time, or perform scheduling of invocations across a distributed system. Yet, so many of the things you would need to make based on LLM output as a LLM user are changes a LLM cannot directly make. The LLM cannot read your files, mutate or compile them. While you could copy-paste output from a LLM into respective files, this process adds up and makes you slower than you could be by multiple factors. This is where the need for an abstraction layer that could mediate request/responses to/from the LLM becomes pertinent.

Path towards a specification

Building on this idea, you could imagine how you could go from 1. unstructured LLM outputs to 2. structure in a format, say, XML, to 3. having the LLM embed instructions into responses, and, 4. having the local program execute those instructions, to, finally, 5. passing in tools and capabilities via the LLM context to receive a more relevant suggestion from the LLM.

For this demonstration, I am going to use the example of a File System Navigator: A local program that lets an LLM navigate and manipulate files, where the LLM can:

- List directory contents

- Read file contents

- Create/modify files

- Execute shell commands (with safety constraints)

Phase 1: Raw LLM Output (Copy-Paste Level)

User Input: “I want to read a file called ‘config.txt’ in my current directory”

Raw LLM Response:

Here are the commands to read config.txt depending on your system:

On Windows (Command Prompt):

type config.txt

On Windows (PowerShell):

Get-Content config.txt

On macOS/Linux (Terminal):

cat config.txt

Or if you want to view it page by page:

less config.txtYou would copy-paste this into your terminal and execute it. This works well in a lot of cases and is simple to derive and access. The issue is that the LLM has produced more output than you needed. There’s no guidance on error-handling and no way to chain operations. The LLM cannot see further results.

Phase 2: Structured XML Output — treat output as instruction

We could ask the LLM to provide the same functionality, but in a structured XML format that we can parse programmatically.

User Input:

“I want to read a file called ‘config.txt’ in my current directory. Please respond in XML format with the appropriate commands.”

Of course, if all I am going to do is copy-paste, this is not going to be any better than Phase 1. This is marginally better in situations where structure is desirable. I can write it into a file for further processing. I will also be able to associate additional metadata with the output such as command<->shell<->OS associations and where I should run it. It will then be trivial for me to use a text processor to extract the relevant bits.

<response>

<operation type="read_file">

<target>config.txt</target>

<commands>

<windows>

<cmd>type config.txt</cmd>

<powershell>Get-Content config.txt</powershell>

</windows>

<unix>

<bash>cat config.txt</bash>

<zsh>cat config.txt</zsh>

<fish>cat config.txt</fish>

<ksh>cat config.txt</ksh>

<alternative>less config.txt</alternative>

</unix>

</commands>

<error_handling>

<check_exists>

<windows>

<cmd>dir config.txt</cmd>

<powershell>Test-Path config.txt</powershell>

</windows>

<unix>

<bash>ls config.txt</bash>

<zsh>ls config.txt</zsh>

<fish>test -f config.txt</fish>

<ksh>ls config.txt</ksh>

</unix>

</check_exists>

</error_handling>

<shell_specific_notes>

<fish>Fish shell has different syntax for conditionals if you need scripting</fish>

<ksh>Korn shell supports both POSIX and legacy AT&T syntax</ksh>

</shell_specific_notes>

<description>Read the contents of config.txt file in current directory</description>

</operation>

</response>More importantly, I can have my Python program process the commands as though they were instructions and pass them to a subprocess.

def execute_powershell_command():

"""Execute a command on Windows via subprocess"""

xml = get_response_from_llm()

read_file_operation = [o for o in xml["operation"] if o["type"] == "read_file"][0]

windows_commands = [c for c in read_file_operation["commands"] if c["tag"] == "windows"][0]

command = windows_commands["powershell"]

result = subprocess.run(["powershell", "-Command", command], capture_output=True, text=True)

return {

"output": result.stdout,

"error": result.stderr,

"success": result.returncode == 0

}Phase 3: Tool Discovery via Context Injection

We could go a step further by injecting our local capabilities directly into the LLM’s context:

System Context to LLM:

SYSTEM: You are working with a local Windows machine running PowerShell.

Current environment:

- OS: Windows

- Shell: PowerShell

- Working Directory: C:\Users\username\Documents

Available PowerShell commands:

FILE OPERATIONS:

- Get-Content: Read text files

- Set-Content: Write text files

- Get-ChildItem: List directory contents

- Test-Path: Check if file/directory exists

- Copy-Item, Move-Item, Remove-Item: File management

XML OPERATIONS:

- Select-Xml: Query XML files with XPath

- [xml]$content = Get-Content file.xml: Load XML into object

- $xml.SelectNodes("//element"): Navigate XML structure

OUTPUT/DISPLAY:

- Write-Output: Display text output

- Format-Table: Display data in table format

- Out-GridView: Display data in GUI grid

- ConvertTo-Json, ConvertFrom-Json: JSON handling

DATA PROCESSING:

- Import-Csv, Export-Csv: CSV file operations

- Group-Object, Sort-Object, Where-Object: Data filtering/sorting

CUSTOM COMMANDS:

- Read-ParquetFileSafely: Read parquet files with safety limits

Syntax: Read-ParquetFileSafely -Path <filepath> [-MaxRows <int>] [-Columns <string[]>]

RESPONSE FORMAT - Always respond with XML in this exact structure:

<instructions>

<operation type="descriptive_operation_name">

<command>actual PowerShell command here</command>

<description>brief description of what this does</description>

</operation>

</instructions>

EXAMPLE:

User: "Read output.parquet files in current directory"

Response:

<instructions>

<operation type="read_file">

<command>Read-ParquetFileSafely -Path output.parquet</command>

<description>Read a parquet file</description>

</operation>

</instructions>Since this reduces ambiguity in what you might expect from the LLM, the client code will be shorter:

def read_file(command):

result = subprocess.run(["powershell", "-Command", command], capture_output=True, text=True)

print(result.stdout)

return result

# user_prompt = "Read output.parquet files in current directory"

xml = get_response_from_llm(user_prompt)

read_file_operation = [o for o in xml["instructions"] if o["type"] == "read_file"][0]

# assume the first response is the best; this is in fact how a lot of MCP clients behave today

# users would ideally have a choice between responses

command = read_file_operation["command"]

read_file(command)A Simple Schema for LLM-Tool Interaction

Based on this evolution from unstructured to structured interaction, we could design a simple protocol for LLM-tool communication. If we were to create such a protocol from scratch, it might look something like this:

<mcp-session>

<environment>

<!-- Operating system identifier (windows|linux|macos) -->

<os>string</os>

<!-- Shell/command interpreter (powershell|cmd|bash|zsh|fish) -->

<shell>string</shell>

<!-- Current working directory path -->

<working-directory>path</working-directory>

<!-- User context for capability filtering (enterprise-dev|admin|basic-user) -->

<user-context>string</user-context>

</environment>

<capabilities>

<standard-commands>

<command name="string" category="string">

<!-- Human-readable description of command functionality -->

<description>string</description>

<!-- Command syntax with parameter placeholders -->

<syntax>string</syntax>

<!-- Optional usage examples -->

<examples>

<example>string</example>

</examples>

</command>

</standard-commands>

<custom-commands>

<command name="string" category="string">

<!-- Description of custom/proprietary command -->

<description>string</description>

<!-- Syntax including custom parameters -->

<syntax>string</syntax>

<!-- Safety/performance constraints -->

<safety-constraints>

<constraint>string</constraint>

</safety-constraints>

</command>

</custom-commands>

<response-format>

<!-- XML template structure for LLM responses -->

<template>

<instructions>

<operation type="string">

<!-- Actual executable command -->

<command>string</command>

<!-- Human-readable operation description -->

<description>string</description>

<!-- Optional conditional execution -->

<conditional if="string">string</conditional>

</operation>

</instructions>

</template>

</response-format>

</capabilities>

<execution-context>

<!-- Security mode (enterprise|development|restricted) -->

<safety-mode>string</safety-mode>

<!-- Comma-separated allowed operation types -->

<allowed-operations>string</allowed-operations>

<!-- Commands blocked for security -->

<restricted-commands>string</restricted-commands>

</execution-context>

</mcp-session>This captures the essence of what any LLM-tool protocol needs:

- Environment awareness: What system are we running on?

- Capability declaration: What can the system do?

- Communication format: How should the LLM structure its responses?

- Security boundaries: What operations are allowed?

This is fundamentally what MCP does, but as we’ll see, the actual MCP specification is far more complex.

Specification vs Implementation

In software and standards, you must distinguish between specification and implementation. C++ (C++ 20, etc.) is a language specification; Visual C++, GNU C++, and Clang are implementations. TCP/IP is a specification. The Linux network stack, the Windows network stack, and the BSD network stack are implementations of the TCP/IP standard. Chrome, Firefox, Edge, Brave, etc., are implementations, in part, of ECMAScript. Analogously, MCP is a specification, and FastMCP is one of its implementations.

The Reality: What MCP Actually Requires

Now let’s see what implementing a real, interoperable standard requires. As of January 2025, the MCP standard is pretty involved. It includes many recommendations (SHOULD) and instructions (MUST) to implementers. Examples of deep specifics include how clients should tear down connections and how servers must validate Origin. Some of the more salient aspects distilled from the live specification are the following. This section contains technical details that form the crux of the post.

Core Architecture Requirements

The fundamental building blocks that define MCP’s structure:

- Client-Server Model: There must be a client and a server even if they are the same program

- JSON-RPC Foundation: The client and server must interact over JSON-RPC 2.0 and all the bells and whistles that come with it, such as serializing messages in the JSON-RPC message format

- Stateful Connections: Connections must be stateful—the client and server must negotiate their capabilities and manage stateful connections. There must be a connection initialization phase, prior to which the client may not interact with the server in any meaningful way

Capability Framework

What each side of the connection can offer:

- Server Capabilities: Servers may offer Resources, Prompts, and Tools

- Client Capabilities: Clients may offer Sampling, Roots, and Elicitation

Transport Mechanisms

How MCP moves data around:

- Transport Options: Communication may happen over streamable HTTP and STDIO, but Authorization may be implemented only over HTTP. HTTP implementations are not mandatory, but STDIO implementations are highly encouraged. Technically, the implementation may support neither and still qualify as an implementation. Implementers may also choose additional transport mechanisms such as WebSockets

- STDIO Implementation: In a STDIO implementation, the client launches the server as a subprocess. The MCP server essentially becomes a child of the client. The corresponding read/write file descriptors then become the means for exchanging communication between the client and the server

- HTTP Implementation: In the Streamable HTTP version (this used to be HTTP + SSE in earlier iterations of the MCP standard), the server must provide a single HTTP endpoint that supports POST and GET. Local servers should only bind to localhost

HTTP Protocol Details

The nitty-gritty of HTTP-based MCP:

- Client-to-Server Communication: For responses and notifications from the client to the server, every JSON-RPC message from the client must be an HTTP POST. The server must return HTTP 202 Accepted with an empty body if it accepts it

- Server-to-Client Responses: For responses to requests, the server must return

Content-Type: application/jsonnormally, andContent-Type: text/event-streamfor streaming. The client must handle both these cases. So the client must be equipped to deal with unsolicited SSEs from the server without initiating HTTP POSTs itself - Session Management: Servers may terminate sessions at any point, and clients should request session terminations if they don’t need them anymore via HTTP DELETE

Security and Authorization

How MCP handles auth when it needs to:

- OAuth Integration: Authorization is optional. An MCP server is deemed protected if it acts as an OAuth 2.1 resource server. It must be capable of responding to protected resource requests using access tokens. The client acts as an OAuth 2.1 client in this model. A separate authorization server interacts with the user to generate access tokens. The client is responsible for choosing the authorization client

Operational Features

Advanced capabilities for real-world usage:

- Progress Tracking: Implementers may implement progress tracking for long-running operations through notifications. Clients and servers may both send notifications. The sender must include a

progressTokenin the request metadata. Both parties should track active progress tokens and implement rate-limiting - Filesystem Roots: As of June 2025, the MCP allows clients to declare filesystem “roots” to servers via notifications within whose bounds servers may operate

- LLM Sampling: As of June 2025, servers may request LLM sampling. This enables clients to maintain model selection control and servers to leverage AI capabilities with no server API keys necessary. Adherence to the spec would require implementing a UI to review sampling requests, and allow users to edit prompts before they are sent to the LLM

- Model Flexibility: Servers and clients may both use different LLMs. The server and client may have access to different sets of models

Core Functionality Patterns

While the previous sections covered the infrastructure and plumbing of MCP, let’s now examine the actual functionality patterns that developers interact with. These are the four main ways MCP systems expose their capabilities to users:

- Prompts: MCP servers may expose prompts with the intention of the user having access to choose them. The server makes these available to the user via the client. The user may retrieve the list of prompts or a specific prompt respectively through a

prompts/listorprompts/getcall via the client to the server - Resources: MCP servers may expose resources (data, files, etc.) to clients, uniquely identified by URIs. Clients can invoke the

resources/listandresources/readmethods to review or get resources - Tools: MCP servers may expose tools, which are the most popular use case for MCP today, to interact with external systems—databases, filesystems, APIs, etc. Servers providing tools must expose

tools/listandtools/callmethods. A tool call may return additional context or data to inform the client - Completions: MCP implementations may provide completions like IDEs. Clients are informed about completion capabilities through

completion/complete

Why the Complexity?

Looking at our simple schema versus the MCP specification, you might wonder why all this complexity is necessary. The answer lies in the difference between solving your specific problem and creating a universal standard:

- Interoperability: Any client must work with any server

- Evolution: The protocol must handle version changes gracefully

- Security: Enterprise deployments need authentication and authorization

- Scale: From local tools to distributed systems

- Edge cases: Years of real-world usage surface countless scenarios

For full-blown MCP support you:

- MUST implement JSON-RPC 2.0 protocol

- MUST handle bidirectional communication

- MUST implement capability negotiation

- MUST support resource discovery

- MUST handle streaming for large responses

- MUST implement proper error handling with specific HTTP error codes

If you skip the complex parts of MCP (streaming, bidirectional comms, resource subscriptions, etc.) to build something simple, you’re not really implementing MCP - you’re building something else that happens to use some MCP concepts. But then you lose interoperability benefits.

FastMCP

FastMCP is probably the best known implementation of the MCP spec. It is built in Python and on top of FastAPI. Here’s how you might implement the file system navigation capabilities from our simple schema using FastMCP:

import os

import subprocess

from fastmcp import FastMCP

mcp = FastMCP("File System Navigator")

# Tool to read file contents (similar to our XML example)

@mcp.tool

def read_file(path: str) -> str:

"""Read the contents of a file."""

try:

with open(path, 'r') as f:

return f.read()

except Exception as e:

return f"Error reading file: {str(e)}"

# Tool to list directory contents

@mcp.tool

def list_directory(path: str = ".") -> list[str]:

"""List contents of a directory."""

try:

return os.listdir(path)

except Exception as e:

return [f"Error: {str(e)}"]

# Tool to execute shell commands (with safety constraints)

@mcp.tool

def execute_command(command: str, shell: str = "bash") -> dict:

"""Execute a shell command safely."""

# Safety check - only allow certain commands

allowed_commands = ["ls", "cat", "pwd", "echo", "grep", "find"]

cmd_parts = command.split()

if cmd_parts[0] not in allowed_commands:

return {"error": f"Command '{cmd_parts[0]}' not allowed"}

try:

result = subprocess.run(

command,

shell=True,

capture_output=True,

text=True,

timeout=5 # 5 second timeout

)

return {

"output": result.stdout,

"error": result.stderr,

"returncode": result.returncode

}

except Exception as e:

return {"error": str(e)}

# Resource to expose current environment info

@mcp.resource("environment://current")

def get_environment():

"""Get current environment information."""

return {

"os": os.name,

"cwd": os.getcwd(),

"shell": os.environ.get("SHELL", "unknown")

}You can peruse the documentation here.

What MCPs are not

Now that you know what it takes to define an MCP server and tools therein, it might seem reasonable to you that a lot of things you hear about MCPs are actually not true:

-

“MCPs are just SDKs or API wrappers”: While MCPs can wrap APIs and tools, they’re not really MCPs without a client and server implementing the MCP standard. MCPs don’t replace SDKs; they provide a standardized protocol for LLMs to interact with tools that SDKs expose.

-

“MCPs are a replacement for LLMs”: There’s no intelligence innately within the MCP server or client. Intelligence comes from the LLMs that use these tools, not from the MCP infrastructure itself.

-

“MCPs are the only way to interact with filesystems or invoke tools”: LLMs can interact with external systems through many mechanisms. MCPs are just one standardized approach among several options.

-

“MCPs eliminate the need for careful prompt engineering”: MCPs don’t replace the need for thoughtful context construction and prompt design. They’re tools that enhance LLM capabilities, not magic solutions.

Pitfalls of (using) MCPs and MCP anti-patterns

- MCPs eat into the LLM context window. The more MCP servers you include in the context, the less space available for context. This is a particularly tricky problem when you incorporate multiple MCP servers into your LLM’s context.

- Adding too many similar MCPs might confuse the LLM. As an example, you may incorporate both some storage MCP and the AWS DynamoDB MCP. The descriptions of the tools provided by these MCPs may be at odds and you may get suboptimal or undesirable tool selections.

- That all MCP server definitions are production-grade. In fact, this is far from the case. There is an MCP server proliferation problem much like there’s an Android app store problem or an NPM package proliferation problem. Many MCP servers are developed once and never revisited. MCP servers may have glaring security holes. So it’s very important to study the code of the MCP server before you decide to use them.

- Many MCP servers implement only a subset of the spec. MCP servers often forego much of the intricacies of what the MCP spec demands. While most implement tools, they may not implement SSEs, notifications, resources, prompts, or other capabilities outlined in the specification.

- Using MCPs when the LLM itself is guaranteed to have required capability. One example of this is if you want to have the LLM write poetry. Unless you need to give the LLM specific context that is only in sources inaccessible to the LLM, the LLM is likely to do a better job at generating poetry exclusively without your MCPs.

- Assuming MCPs will induce determinism into the LLM’s decision-making. While MCPs are great at helping sway LLM decision-making, LLMs may choose to misattribute significance to them or outright ignore them.

The State of MCP Ecosystem: A More Nuanced View

The proliferation problem mentioned in the pitfalls section warrants a closer look. I analysed 833 MCP server repositories listed on the awesome-mcp-servers directory which revealed a more complex picture than initial impressions might suggest.

Repository Activity

| Status | Count | Percentage |

|---|---|---|

| Active | 530 | 63.9% |

| Dormant | 299 | 36.1% |

| Abandoned | 0 | 0% |

I considered any repository not updated in 200 days dormant. While the dormancy rate remains concerning at 36%, the active repositories tell a different story when we look deeper into their implementations.

MCP Server Detection

| Category | Count | Percentage |

|---|---|---|

| Confirmed MCP Servers | 722 | 87.1% |

| Non-MCP Repositories | 107 | 12.9% |

Among the confirmed MCP servers, confidence levels break down as follows:

| Confidence Level | Count |

|---|---|

| High | 379 |

| Medium | 343 |

This suggests that most repositories in the directory are indeed legitimate MCP implementations, not just projects borrowing the name.

Core MCP Feature Implementation

Among the 722 confirmed MCP servers, the feature implementation tells a dramatically different story than naive analysis might suggest:

| Feature | Repositories | Percentage | Average per Repo |

|---|---|---|---|

| Tools | 722 | 100% | 5.6 |

| Resources | 100 | 13.9% | - |

| Prompts | 55 | 7.6% | - |

Every confirmed MCP server implements tools, with an average of 5.6 tools per repository. While resources and prompts show lower adoption, this likely reflects that tools are the primary use case for most MCP implementations.

Advanced Feature Adoption

| Feature | Repositories | Percentage |

|---|---|---|

| Filesystem Roots | 9 | 1.2% |

| Progress Tracking | 9 | 1.2% |

| OAuth Authentication | 26 | 3.6% |

Advanced feature adoption remains low, which could indicate either that these features aren’t needed for most use cases, or that the specification complexity discourages their implementation.

Implementation Languages

| Language | Repositories | Percentage |

|---|---|---|

| Python | 324 | 44.9% |

| JavaScript/TypeScript | 305 | 42.2% |

| Go | 45 | 6.2% |

| Rust | 14 | 1.9% |

| Other | 34 | 4.8% |

Python slightly edges out JavaScript/TypeScript, reflecting the AI/ML community’s strong adoption of MCP alongside web developers.

What This Means for Users

The good news is that most repositories claiming to be MCP servers actually are MCP servers, and they implement the core tool functionality. The average of 5.6 tools per server suggests these aren’t minimal implementations but rather functional servers addressing real use cases. You would expect this to be the case for a set of items expressly curated for a repository like awesome-mcp-servers.

However, concerns remain valid. The 36% dormancy rate still indicates maintenance issues. The low adoption of resources and prompts suggests either these features are over-specified for common needs, or developers find them too complex to implement. The minimal adoption of advanced features reinforces that most implementations stick to the basics.

Before adopting an MCP server, you should still evaluate its maintenance status, feature completeness, and security posture. But the data suggests that if you need tool-based MCP functionality, there’s a reasonable chance of finding a working implementation. For resources, prompts, or advanced features, you’re likely better off with a custom solution or one of the few comprehensive implementations.

When Might You Want Your Own Approach?

LLM engagement specs are still in their infancy. There’s no reason to think that MCPs are the ultimate standard. There’s no need to absolutely implement STDIO interaction if you don’t need it. There’s a good reason to adhere to OS-specific and shell-specific differences. There’s a good reason to use XML rather than JSON for payload formats. Organizations may choose to not use JSON RPC, but rather a proprietary standard within their organizations. This is more crucial at the enterprise level than at the startup level.

Consider a specific example on cross-platform CLI tool development that might benefit from our simpler schema. Under some circumstances it may be more desirable than full-blown MCP standard adherence:

- Shell-specific knowledge: The LLM understands that fish shell has different syntax (

(command)vs$(command)) and can generate appropriate tests. We’re able to tell the LLM through a well-defined interface what sort of a shell it is that we’re dealing with. - OS-aware operations: The LLM has better guidance with us telling it that macOS uses Homebrew, Windows uses Chocolatey, and Linux uses apt/yum

- Context-driven decisions: Can reason about platform differences (registry vs filesystem, path separators, permission models)

- Flexible tool definitions: Custom tools encode platform-specific behaviors that wouldn’t fit standard API schemas

- Use within a closed system: For specific use within a closed system such as a team or a company.

Standard MCP would require separate tool definitions for each OS/shell combination, whereas our context-driven approach lets the LLM reason about the differences and generate appropriate platform-specific commands dynamically. The schema captures the nuanced knowledge about shell and OS differences that a CLI development team needs.

Are specifications good?

The thing about specifications is that implementers are not required to implement them exactly, or even closely. Recall the browser wars from the late 90s and throughout the 00s. Web developers needed to be aware of all the tips and tricks that every browser in vogue introduced down to browser versions. Even in the post ES2015 world, this is a headache. GNU C is often described as an abomination of the C standard, but it is ubiquitous. The “best” project is often the one that is marketed the most. Sun Microsystems spent $500M in the late 90s in their attempt to dethrone C++ from the OOP and systems programming pedestal. TCP/IP beat the OSI model in implementation, but we still visualize the networking stack through the 7-layer OSI model. New patterns and paradigms enter the tech enthralling observers every so often. Systems converge towards standards — naturally or by coercion.

So, are specifications and standards worthless? Not really. MCP is evolving. It’s not complete, and by no means is it ubiquitous. LLMs are evolving and new usage patterns around LLMs are emerging every day. On the one hand, you’ve got to be practical and use what’s available and swim with the current, and on the other hand, you’ve got to go against the grain and reinvent the wheel where necessary. Specifications are the cost you pay for collective progress. They are tradeoffs.

References

- MCP Specification - The official Model Context Protocol specification

- FastMCP Documentation - Python implementation of MCP built on FastAPI

- JSON-RPC 2.0 Specification - The underlying protocol used by MCP

- Model Context Protocol Overview - Official MCP documentation and guides

- OAuth 2.1 - Authorization framework referenced in MCP security

Leave a comment

2. Setting up an Ethereum full node

2025-04-19

Extending the Austrian School

Until recently, I was a crypto skeptic and believed Bitcoin-like proof-of-work was as good as it could get in the crypto world, because it draws the closest parallels to the supply-demand dynamics of the gold, silver, and commodities markets. I was somewhat traditionally fiscally conservative and a believer in the Austrian School of Economics for a very long time. While I still believe the principles of the Austrian School will hold true in this physical Universe, I’ve extended my school of thought to explain network effects. I treat these extensions as “overrides” in this ever-so-dynamically-evolving world. I admire Ethereum as a network and have come to believe that networks are the core aspect of economics itself. Gold, Silver, Dollars, BTC, ETH—they all proliferate, but only because of networks.

Invented in 2013, Ethereum allows organizations to create smart contracts, which enable transacting entities to codify agreements directly into the blockchain. Despite its many scaling challenges, Ethereum has remained relevant, clocking in at a ~$200B market cap at the time of writing. There’s already plenty out there on Ethereum, the Austrian School, money, and economics—so I won’t dwell on that here. But at some point, I’ll write about my own interpretation of economics, substantiating my networks-are-everything belief and adding my voice to the Internet’s collective ontology.

Developer Perspective: Why Run Your Own Node?

My focus in this article is on the Ethereum blockchain—and specifically, from the point of view of a developer, not someone interested in running a node to earn staking rewards. As a developer:

- I want to communicate with the network over APIs. Being a stingy man, I’m not keen on paid full-node solutions, of which there are many (Infura, Alchemy, QuickNode).

- I like having control over the hardware and software updates.

- I’m taking this as an opportunity to dive into the internals of go-ethereum (Geth) and Prysm. When I say “full-node”, I’m deliberately excluding archive nodes. Those are a different beast, and may pose a different set of challenges. I only need a full-node for now.

Limitations with ETH APIs

From a future-focused perspective, I think the current set of Ethereum APIs isn’t exhaustive or ergonomic for power users. These APIs are designed to be foundational—and that’s fine—but it falls to operators and service providers to build composite solutions on top of them. By composite, I don’t mean L2s. In fact, I imagine this category of solutions as one that spans across layers: giving me the ability to query not just Ethereum, but also L2 chains like Base, Arbitrum, and others. What we lack today is a repertoire of composite tooling for reliable, performant, and exhaustive querying of blockchain state. What these would manifest as would be materializations, map-reduce solutions, APIs, novel storage, and observability tools on top of current APIs. I’ll talk more about extra-API extensibility in a future post.

Prysm and go-ethereum: A Developer’s Duo

A quick primer before we jump into setup instructions. Ethereum has two main components since the shift to Proof of Stake: the execution layer and the consensus layer. go-ethereum (Geth)

Install the prerequisite software to run the Ethereum and Prysm code inside Docker containers.

sudo apt-get update

sudo apt-get install ca-certificates curl gnupg

sudo install -m 0755 -d /etc/apt/keyrings

curl -fsSL https://download.docker.com/linux/debian/gpg | \

sudo gpg --dearmor -o /etc/apt/keyrings/docker.gpg

sudo chmod a+r /etc/apt/keyrings/docker.gpg

echo \

"deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.gpg] https://download.docker.com/linux/debian \

$(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \

sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

sudo apt-get update

sudo apt-get install \

docker-ce \

docker-ce-cli \

containerd.io \

docker-buildx-plugin \

docker-compose-plugin

# Verify Installation

sudo systemctl status dockerSet up Geth and Prysm:

# Create the directories for Geth and Prysm data

mkdir -p $HOME/ethereum/geth $HOME/ethereum/prysm

# Generate JWT secret and copy it to both directories

openssl rand -hex 32 | sudo tee $HOME/ethereum/geth/jwtsecret

cp $HOME/ethereum/geth/jwtsecret $HOME/ethereum/prysm/jwtsecret

# Set correct permissions for JWT secret files

chmod 644 $HOME/ethereum/geth/jwtsecret

chmod 644 $HOME/ethereum/prysm/jwtsecret

# Create Docker network for both containers

sudo docker network create eth-net

# Stop and remove existing containers if they exist

sudo docker stop eth-node prysm || true

sudo docker rm eth-node prysm || trueRun the eth-node and Prysm containers:

sudo docker run -d --name eth-node --network eth-net --restart unless-stopped \

-p 8545:8545 -p 8551:8551 -p 30303:30303 -p 30303:30303/udp \

-v $HOME/ethereum/geth:/root/.ethereum \

ethereum/client-go \

--syncmode "snap" \

--http \

--http.addr 0.0.0.0 \

--http.port 8545 \

--authrpc.port 8551 \

--authrpc.addr 0.0.0.0 \

--authrpc.vhosts="eth-node"

# Wait for Geth to start up

sleep 30

# Run Prysm with appropriate settings

sudo docker run -d --name prysm --network eth-net --restart unless-stopped \

-v $HOME/ethereum/prysm:/data \

-p 4000:4000 -p 13000:13000 -p 12000:12000/udp \

gcr.io/prysmaticlabs/prysm/beacon-chain:latest \

--datadir=/data \

--jwt-secret=/data/jwtsecret \

--accept-terms-of-use \

--execution-endpoint=http://eth-node:8551

# Check if containers are up and running

echo "Checking container status:"

sudo docker ps --format "table {{.Names}}\t{{.Status}}"

# Check Geth's logs for confirmation of HTTP server start

echo "Geth's HTTP server status:"

sudo docker logs eth-node | grep "HTTP server started"

# Check Prysm's logs for connection confirmation

echo "Prysm's connection status:"

sudo docker logs prysm | grep "Connected to new endpoint"

# Monitor both logs for any errors or warnings

echo "Monitoring Geth logs for errors:"

sudo docker logs eth-node -f &

echo "Monitoring Prysm logs for errors:"

sudo docker logs prysm -f &Leave a comment

1. Time complexity of len() in CPython

2024-03-01

The len() function in CPython returns the length of the container argument passed to it.

len() responds to all containers. Comments on the Internet claim the time-complexity of the

len() function is O(1), but they fail to substantiate. This is not very

well-documented officially either and leaves room open for disagreement.

I’ve been on interview debriefs where interviewers have confidently claimed the

time-complexity to be O(n), which, of course, is wrong. The time-complexity of len() is

indeed O(1), always.

Under the hood, CPython is a C program (hence the name CPython for this version of Python. There’s also Jython, which, as you can guess, is Python on the JVM, which, apart from subscribing to Python language spec, has little to do with the CPython implementation or the runtime.):

int

Py_Main(int argc, wchar_t **argv)

{

…

return pymain_main(&args);

}All native Python objects including containers have representations and behaviours defined by C

structs and C functions respectively. listobject.h, for instance, is the header file for the

CPython list object. All objects in CPython are of type PyObject. The length of an object is

represented by its Py*_Size() function. For the list object, that’s PyList_Size().

Py_ssize_t

PyList_Size(PyObject *op)

{

…

return PyList_GET_SIZE(op);

}The PyList_Size() function merely calls its super function Py_SIZE() function defined

in object.h where it performs a look-up of the attribute, ob_size, on the generic

PyVarObject.

static inline Py_ssize_t Py_SIZE(PyObject *ob) {

…

return var_ob->ob_size;

}We can see from the definition of PyVarObject that ob_size is a Py_ssize_t object

which under most conditions is a wrapper around ssize_t.

We can see from the definition of PyVarObject that ob_size is a Py_ssize_t object

which under most conditions is a wrapper around ssize_t.

typedef struct {

PyObject ob_base;

Py_ssize_t ob_size; /* Number of items in variable part */

} PyVarObject;The determination of size/length is a look-up and an O(1) operation for lists

(including strings).

The time-complexity analysis for PyDict_Size is similar.

Py_ssize_t

PyDict_Size(PyObject *mp)

{

…

return ((PyDictObject *)mp)->ma_used;

}ma_used, which represents the number of items in the dict, is incremented

every time a new item is added.

static int

insertdict(PyInterpreterState *interp, PyDictObject *mp,

PyObject *key, Py_hash_t hash, PyObject *value)

{

…

mp->ma_used++;

…Similarly, item deletions decrement ma_used.

static int

delitem_common(PyDictObject *mp, Py_hash_t hash, Py_ssize_t ix,

PyObject *old_value, uint64_t new_version)

{

…

mp->ma_used--;

…So, len() invoked with dict objects too is an O(1) operation. You can perform similar

exercises with sets, tuples, bytes, bytearrays. Length lookups are always O(1).

Leave a comment